Everyone’s shouting about AI like it’s magic. We’re not here for that. We wanted to see what happens when you stop treating AI like a chatbot and start building with it like a tool.

So we’re using GPT4All—an open-source, local LLM runner—to build our own assistant. Not to make content. Not to pretend it’s human. But to see how far we can push an offline, fully controlled LLM system into something real.

This isn’t a review. It’s a build log.

Why GPT4All?

- No API fees

- No surveillance

- Total control over model choice

- Works offline, runs on local hardware

It’s not the most powerful. But it’s yours. And that makes it interesting.

What We’re Building (WIP)

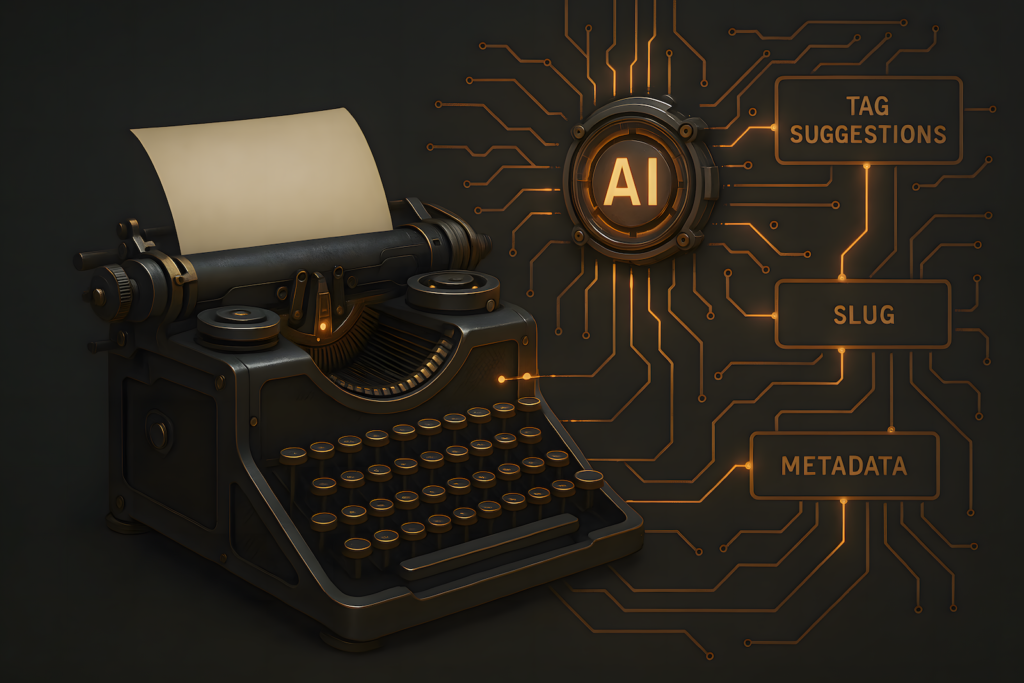

A lightweight, locally running assistant that can:

- Read and reference our blog vault

- Suggest tags, slugs, and SEO data

- Generate structured drafts

- Run basic content sanity checks (keyword usage, duplication, etc.)

- Do all of the above with no internet connection

Not a chatbot. A task-specific, high-context writer’s tool.
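The sanity checks in that list don't need a model at all. Here is a minimal sketch, assuming plain-text drafts. The function names and the 5-word shingle size are hypothetical choices for illustration, not code lifted from the build.

```python
import re


def keyword_count(text: str, keyphrase: str) -> int:
    """Count case-insensitive, whole-word occurrences of a keyphrase."""
    pattern = r"\b" + re.escape(keyphrase.lower()) + r"\b"
    return len(re.findall(pattern, text.lower()))


def shingles(text: str, size: int = 5) -> set:
    """Return the set of overlapping word n-grams ("shingles") in a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def duplication_score(a: str, b: str, size: int = 5) -> float:
    """Jaccard similarity of the two shingle sets: 0.0 is no overlap, 1.0 is identical."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0  # at least one text is shorter than one shingle
    return len(sa & sb) / len(sa | sb)
```

A draft whose `duplication_score` against an existing post is high is probably a rewrite of that post, and a `keyword_count` of zero means the keyphrase never made it into the draft.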

The Stack

Tool links for those who want to build alongside:

- GPT4All – open-source platform for running LLMs locally

- Ollama – lightweight local runtime for downloading and serving LLMs

- LlamaIndex – RAG framework for building context-aware prompts from your data

- ChromaDB – fast local vector DB for embedding retrieval

- HTMX – progressive enhancement JS library for frontend interactivity

- Flask – Python microframework for routing and APIs

Build breakdown:

- Model: Mistral 7B via Ollama

- Backend: Flask API with context memory

- Frontend: Lightweight web UI (HTMX + Alpine.js)

- RAG: LlamaIndex + ChromaDB (to load our content vault)

- Hosting: Local only (Mac Mini, Ryzen 7 dev box, etc.)

We’ll version this by feature milestone. First: blog vault ingestion + prompt scaffold.
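To make the ingestion milestone concrete, here is a rough stdlib-only sketch of what "ingestion" means before any embedding happens: read the Markdown files and cut them into overlapping chunks. In the actual build LlamaIndex handles this step, so treat `chunk_words`, `ingest_vault`, the `.md` file assumption, and the chunk sizes as hypothetical stand-ins.

```python
from pathlib import Path


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> list:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def ingest_vault(vault_dir: str) -> list:
    """Read every .md file under vault_dir and return chunk records tagged with their source path."""
    records = []
    for path in sorted(Path(vault_dir).rglob("*.md")):
        text = path.read_text(encoding="utf-8")
        for i, chunk in enumerate(chunk_words(text)):
            records.append({"source": str(path), "chunk_id": i, "text": chunk})
    return records
```

The overlap is there so a sentence that straddles a chunk boundary still shows up whole in at least one chunk.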

Where GPT4All Breaks Fast

We tried:

- Direct test case generation → inconsistent

- Structured JSON output → brittle

- Long prompts across turns → limited unless we chunk carefully

But with retrieved context injected into the prompt and fixed templates, it works, barely. You have to think like a framework, not a chat user.
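"Thinking like a framework" mostly means two things: never hand-write the prompt, and never trust the reply. A minimal sketch of both, assuming the model is asked for a JSON object. The template text, the key names, and the helper names are all hypothetical.

```python
import json
from string import Template
from typing import Optional

# Hypothetical template; the wording and field names are illustrative only.
SEO_PROMPT = Template(
    "You are an SEO assistant. Use only the context below.\n"
    "Context:\n$context\n\n"
    "Working title: $title\n"
    'Reply with a single JSON object with the keys "slug", "meta_description" and "tags".'
)

REQUIRED_KEYS = {"slug", "meta_description", "tags"}


def build_prompt(title: str, context_chunks: list) -> str:
    """Fill the fixed template with the retrieved context and the working title."""
    return SEO_PROMPT.substitute(title=title, context="\n---\n".join(context_chunks))


def parse_reply(reply: str) -> Optional[dict]:
    """Pull the outermost {...} span out of a model reply; return None if it is unusable."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or not REQUIRED_KEYS.issubset(data):
        return None
    return data
```

When `parse_reply` returns `None`, the caller can retry or fall back to a manual field instead of passing broken output down the pipeline. That is the whole trick for living with brittle JSON.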

Why This Is an EAI Fit

This isn’t about “Can GPT replace your job?” It’s about:

- Can you build a focused tool from an open-source model?

- What breaks?

- What’s worth automating?

We’ll document every step. And no, we won’t pretend it’s magic.

V1 Bootstrapping: SEO Draft Assistant (Done)

Real Example: Internal Link Suggestion That Actually Helped

We ran a test on a placeholder title: “Why Your Home Office Setup Kills Your Focus”. The assistant parsed it, matched it against existing content in the vault, and returned anchor suggestions pointing to:

- Our post on tactical workspace gear (RWH)

- A burnout systems piece from MomentumPath

- A sleep-structure article from HealthyForge

It didn’t generate new text. It just pointed out which internal content clusters were contextually linked based on actual vault embeddings. That saved us about 10–15 minutes of manual search and reduced the chance of missing deep posts from early 2024.

Was it perfect? No. Did it hallucinate? Surprisingly, no. Every link was valid and context-aware.
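One plausible reason for the lack of hallucinated links: this step is retrieval, not generation. A toy sketch of the idea, using hand-made 3-dimensional vectors in place of real embeddings. The URLs, the vectors, and the `min_score` threshold are made up for illustration.

```python
import math


def cosine(a: list, b: list) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def suggest_links(query_vec: list, vault: dict, top_k: int = 3, min_score: float = 0.5) -> list:
    """Rank vault posts by similarity to the query and keep the best matches.

    `vault` maps a post URL to its embedding. Only URLs already in the vault
    can come back, so a suggestion cannot point at a page that does not exist.
    """
    scored = [(cosine(query_vec, vec), url) for url, vec in vault.items()]
    scored = [(score, url) for score, url in scored if score >= min_score]
    scored.sort(reverse=True)
    return [url for _, url in scored[:top_k]]


# Toy data: hypothetical URLs with hand-made vectors standing in for embeddings.
vault = {
    "/desk-gear": [1.0, 0.0, 0.0],
    "/burnout": [0.8, 0.6, 0.0],
    "/recipes": [0.0, 0.0, 1.0],
}
print(suggest_links([1.0, 0.1, 0.0], vault))
```

The unrelated post is dropped by the threshold rather than padded into the results, which matters more for link suggestions than squeezing out a third match.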

We started simple. The first working version loads a local copy of our blog vault, processes content into embedded chunks using LlamaIndex, and lets us query it through a local web UI powered by HTMX. When we enter a working title or a primary keyphrase, it:

- Retrieves relevant past content (via semantic match)

- Suggests a working slug based on structure

- Generates a proposed meta description

- Pulls reusable tags from similar posts

- Suggests internal link anchors based on vault context

This all happens locally: no OpenAI, no API call latency, and output grounded in our own vault instead of hallucinated nonsense from random training data.
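Not every item on that list needs the model. A slug, for instance, can get a deterministic baseline that the model's suggestion is compared against. This is a sketch under that assumption: the stopword list and the six-word cap are arbitrary, and `suggest_slug` is a hypothetical helper.

```python
import re
import unicodedata

# Illustrative stopword list; a real one would be tuned to the vault.
STOPWORDS = {"a", "an", "the", "your", "of", "and", "to", "in", "for", "why"}


def suggest_slug(title: str, max_words: int = 6) -> str:
    """Lowercase the title, drop stopwords and punctuation, and join the rest with hyphens."""
    ascii_title = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", ascii_title.lower())
    kept = [w for w in words if w not in STOPWORDS] or words  # keep everything if all were stopwords
    return "-".join(kept[:max_words])


print(suggest_slug("Why Your Home Office Setup Kills Your Focus"))
# prints: home-office-setup-kills-focus
```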

How it’s wired:

- Ollama serves the Mistral 7B model

- LlamaIndex does vault ingestion + context injection

- Flask handles request routing and context bundling

- HTMX renders the results into the browser without JS bloat
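The model call at the bottom of that stack is a plain HTTP POST. Here is a minimal stdlib sketch, assuming Ollama is listening on its default port and the model was pulled under the tag `mistral` (an assumption; use whatever tag your install reports). `build_payload` and `generate` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "mistral") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    return json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")


def generate(prompt: str, model: str = "mistral", timeout: float = 120.0) -> str:
    """POST the prompt to the local Ollama server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]  # with "stream": False the full text arrives in one field
```

A Flask route would wrap `generate` with whatever context the retrieval step returned. With `"stream": False` the server sends one JSON object instead of a stream of partial ones, which keeps the parsing trivial at the cost of waiting for the whole reply.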

Does it still need polish? Absolutely. Is it production-ready? No. But it works. And it’s ours.

Alternate Path: You Don’t Have to Use Python

Everything we’ve built so far runs on a Python-based backend (Flask, LlamaIndex, etc.). But GPT4All doesn’t lock you into Python, and neither does Ollama: the model is served over a local REST API. That means you can build your assistant stack using Node.js, Go, or whatever tech you actually prefer.

The JavaScript Path (If You Hate Python)

You can build a completely JS-based assistant using:

- Node.js + Express for your backend

- React, Svelte, or even HTMX on the frontend

- Call http://localhost:11434/api/generate directly using fetch or axios

Same result: generate prompts, handle context, pull results. All local.

The Tradeoff?

Python has libraries like LlamaIndex, ChromaDB, and embedding tools ready to go. If you want blog vault embedding and RAG-style search, Python is still faster to prototype. JS gives you more flexibility if you’re already deep in the web stack.

Next up: bootstrapping the tagging logic and exposing YAML + front matter export. That’s where we’ll see how reliable this gets at scale—and how much hand-holding the prompts still need.
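As a preview of the export step, here is a rough sketch of front matter rendering without a YAML library. It assumes the metadata is a flat dict of strings and lists of strings, and leans on the fact that a JSON string is also a valid YAML double-quoted scalar. `to_front_matter` and the field names are hypothetical.

```python
import json


def to_front_matter(meta: dict) -> str:
    """Render a flat dict of strings and string lists as a YAML front matter block."""
    lines = ["---"]
    for key, value in meta.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            lines.extend(f"  - {json.dumps(item)}" for item in value)
        else:
            lines.append(f"{key}: {json.dumps(value)}")  # quoting keeps colons and quotes safe
    lines.append("---")
    return "\n".join(lines) + "\n"


print(to_front_matter({"title": "A: B", "tags": ["x", "y"]}))
```

Quoting every value is uglier than hand-written front matter, but it means a title containing a colon can't silently corrupt the file.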