AI tools are everywhere. They’re writing blogs, generating code, optimizing SEO, and flooding inboxes with “thought leadership” that sounds impressive until you actually read it. Everyone’s using them. Few are reviewing the output.

Most teams trust what AI gives them, as long as it doesn’t throw an error. That’s like letting a junior dev push to production because “the build passed.”

That’s where I come in.

The Work That Eventually Got a Label

While some folks are busy asking AI for “10 productivity tips,” I’m reviewing GPT output line by line—finding hallucinations, fixing logic, and cutting the fluff. Not because it’s trendy. Because someone has to make sure the output won’t embarrass a real product.

AI Content Reviewer is a job title now. Funny thing is—I’ve already been doing it.

Here’s what that actually looks like:

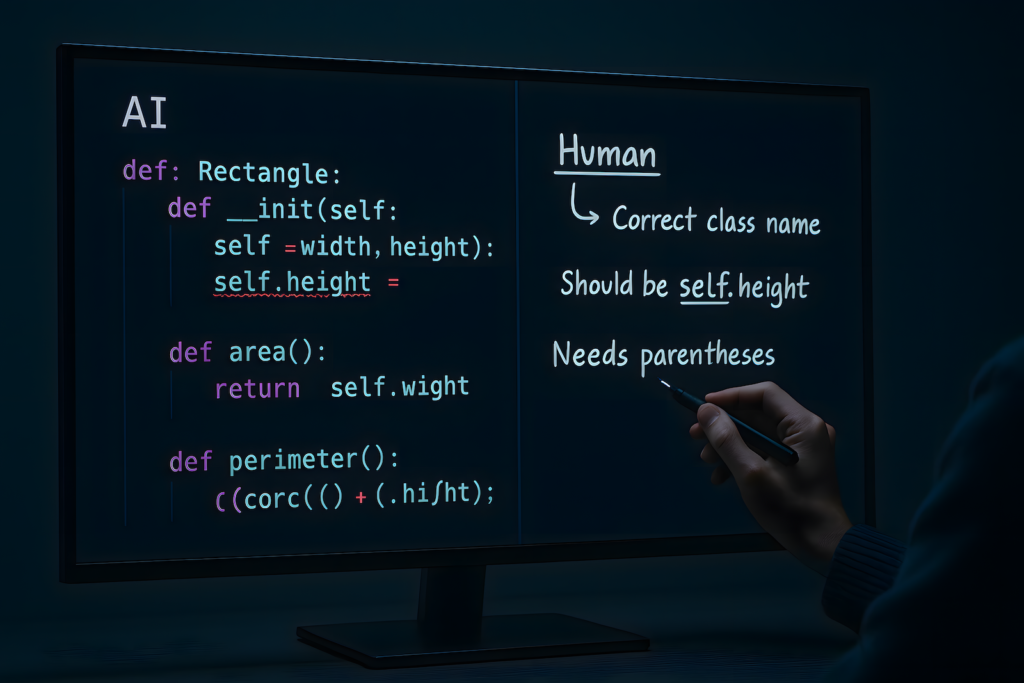

- ❌ Catch hallucinations – AI invented fake metrics. I deleted them.

- 🔍 Cross-check facts – GPT cited a 2023 update that never existed.

- 🔧 Debug code output – Claude gave broken logic. I fixed it line by line.

- 📉 Trim bloat – AI drafts run long on filler. I cut them down to what matters.

- 📚 Rephrase without the hype – No “unleashing potential.” Just clean insight.
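A script can’t tell a real metric from an invented one, but it can shrink the search. Here is a minimal sketch, assuming the draft is plain text. The hypothetical `flag_claims_to_verify` helper returns every sentence that contains a digit (metrics, percentages, years, version numbers), so each one can be checked by hand against a primary source. The sample draft is made up for illustration.

```python
import re

# Any digit marks a concrete, checkable claim: a metric, a percentage,
# a year, or a version number.
SPECIFIC_CLAIM = re.compile(r"\d")


def flag_claims_to_verify(text: str) -> list[str]:
    """Return the sentences that contain numbers a reviewer should source."""
    # Naive sentence split: break after ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if SPECIFIC_CLAIM.search(s)]


# Illustrative draft; the numbers are invented on purpose.
draft = (
    "AI adoption is growing fast. "
    "Teams using our tool ship 47% faster. "
    "The 2023 update changed everything. "
    "Review the output before you publish."
)

for sentence in flag_claims_to_verify(draft):
    print("VERIFY:", sentence)
```

The sentence splitter is deliberately naive. Abbreviations such as "e.g." will cause extra splits. That trade-off is acceptable here because the script only routes specifics to a human. It doesn’t judge whether they’re true.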

I don’t use AI to save time. I use it to remove friction. There’s a difference.

How I Debug Prompts Like Code

The shift is subtle but critical, especially for teams that think AI can replace senior devs or writers. We’re not just debugging code anymore. We’re debugging the prompt that generated it.

Here’s how that played out in my recent workflow:

🔗 Vibe Coding with AI: How We Went from Debugging Code to Debugging Prompts

The bug wasn’t just in the code. It was in the way the AI misunderstood what we actually needed—because the prompt was too shallow, too vague, or built on flawed logic. And that kind of bug doesn’t show up in terminal errors. It shows up in misaligned output and wasted time.

QA Perspective: AI Doesn’t Know Context. I Do.

You can’t just plug AI into a QA workflow and expect it to replace testers. It’ll pass anything that looks “okay” on the surface—and fail to catch the logic underneath.

That’s why we still test manually. That’s why we pair human context with AI output.

🔗 Debugging in QA: Why Manual Testing and AI Assistance Still Matter

I’ve had AI tell me everything was green—while the actual user flow broke after three clicks. That’s not intelligence. That’s statistical guesswork.

Remote Work Reality: This Isn’t Just Theory

I do all of this while managing multiple blogs, building long-form content pipelines, and syndicating across platforms from a remote setup.

I operate under CTRL+ALT+SURVIVE (CAS), a survival-focused content syndication brand that includes:

- RemoteWorkHaven – practical remote work systems

- MomentumPath – burnout recovery and mental structure

- HealthyForge – tactical health and survival

Our content process isn’t some templated AI auto-blast. It’s a layered system:

- Posts start with a problem, not a keyword.

- Drafts get rebuilt, optimized, and reviewed, often over multiple rounds.

- Each post passes through logic cleanup, SEO structure, and format QA.

- Final drafts are manually linked, image-optimized, and tested before going live.

- Syndication follows after: reframed, not reposted.

If AI is involved, it’s as an assistant—not the driver. It helps where it can. I cut what it gets wrong.

That discipline extends to every AI workflow I run. Especially when the AI gets it wrong.

Portfolio: Reviewing AI Where It Fails Most — And What’s Coming Next

A few of the places where I’ve publicly reviewed, dissected, and rebuilt AI output:

- 🔗 AI SEO Content Is Killing the Web — breaking down why “optimized” AI content is rotting relevance from the inside.

- 🔗 Prompt Engineering Without the Hype — teaching people to write prompts that don’t waste dev time.

- 🔗 Why Over-Relying on AI Is Weakening Developers — where AI is used as a shortcut, critical skills decline.

These aren’t just posts. They’re production audits: structured teardowns, a fix-first mindset, and zero tolerance for vague output.

And this is just the beginning.

As AI expands beyond ChatGPT and Claude, we’re already exploring newer tools:

- Coding copilots like Cursor, Codeium, and Ghostwriter

- AI CMS experiments that try to automate editorial processes

- Image generation tools that need human prompt control to avoid nonsense outputs

The goal’s the same: expose what’s broken, test where it helps, and cut through the noise.

We’re starting a focused round of real-world tests across:

- Claude 4 for technical writing and code validation

- Gemini inside Google Workspace for doc rewrites and SEO briefs

- Perplexity AI as a research assistant for sourcing and fact-checking

- Runway & Veo for lightweight video workflows

- Midjourney and Firefly for branded image production

- Suno & ElevenLabs for music, voiceovers, and narrative prototyping

- Notion AI & Zapier AI for process automation and documentation cleanup

Each tool gets the same treatment: test, tear down, and rebuild. If it fails, we show why. If it works, we show how far you can actually trust it.

This isn’t hype. It’s QA, applied to AI end to end. Whether a model is writing words, drawing images, or generating code, we treat its output like production. If it ends up in front of users, it’s not a prototype anymore.

That’s how I treat AI workflows—even when no one’s paying me to.

Coming Soon: What Survived Our Tests

We’re already compiling detailed breakdowns of every tool in our AI testing batch—what passed QA, what broke in real-world workflows, and what’s actually worth using.

If you’re trying to separate hype from working systems, keep an eye out. We don’t just list tools—we tear them down.

👉 Stay tuned for the teardown.

This Isn’t a Pitch. It’s a Pattern.

No outreach campaign. No badge. No bullet-point résumé.

Just the receipts: I’ve reviewed code, cleaned up hallucinated logic, shipped posts, and flagged what most teams let slide, across multiple platforms and real-world workflows. Not theory. Just practice, done repeatedly.

This isn’t ambition. It’s pattern recognition. The work’s already being done—just without the name tag.